Medium-Sized Network: AV Behaviors & Collaborative MARL with TorchRL

In this tutorial, we use a medium-sized network for agent navigation. The chosen origin and destination points are specified in this file and can be adjusted by users. We also define AV behaviors based on the agents' reward formulation and implement their learning process using the TorchRL library.

Network Overview

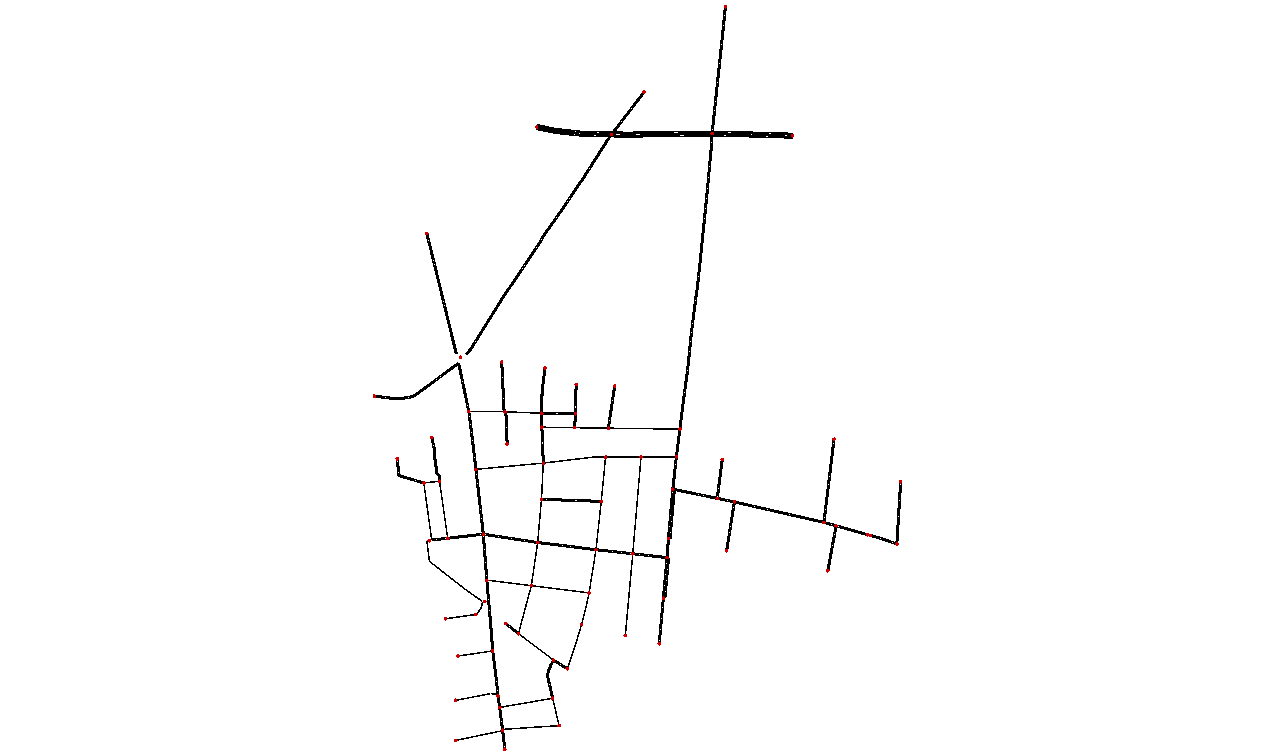

In these notebooks, we use the Cologne network in the SUMO traffic simulator. As an initial baseline, all AVs follow the malicious behavior. Because the malicious reward depends only on the average travel time of the other group, which is the same for every AV, all AVs share the same reward, making this an ideal setup for algorithms designed to solve collaborative tasks.

Users can customize the parameters of the `TrafficEnvironment` class by consulting the `routerl/environment/params.json` file. Based on its contents, they can create a dictionary with their preferred settings and pass it as an argument to the `TrafficEnvironment` class.
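A minimal sketch of preparing such a dictionary, assuming `params.json` is a nested JSON object of defaults: load the defaults, then deep-merge only the settings you want to change. The helper `merge_params` and all key names below are hypothetical placeholders rather than the real schema; check `params.json` for the actual keys and the RouteRL documentation for the exact `TrafficEnvironment` arguments.

```python
import copy
import json


def merge_params(defaults: dict, overrides: dict) -> dict:
    """Recursively merge user overrides into a copy of the defaults.

    Nested dictionaries are merged key by key; any other override value
    replaces the default outright. The defaults are never mutated.
    """
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_params(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Stand-in for the contents of routerl/environment/params.json.
# HYPOTHETICAL keys for illustration only, not the real file layout.
default_json = """
{
  "agents": {"num_agents": 100, "behavior": "selfish"},
  "simulator": {"network": "cologne"}
}
"""
defaults = json.loads(default_json)

# Only the settings that differ from the defaults need to be listed.
user_overrides = {"agents": {"behavior": "malicious"}}

params = merge_params(defaults, user_overrides)
print(params["agents"])
# A dictionary like `params` would then be passed to TrafficEnvironment.
```

Merging onto a copy keeps the file's defaults intact, so several configurations can be derived from the same `params.json` within one notebook.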

Included Tutorials:

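VDN Tutorial. Uses Value Decomposition Networks (VDN), which represent the joint action-value as the sum of per-agent action-values, so training is centralized while each agent acts on its own values.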

QMIX Tutorial. Implements QMIX (QMIX), which leverages a mixing network with a monotonicity constraint.

MAPPO Tutorial. Uses Multi-Agent Proximal Policy Optimization (MAPPO), the multi-agent adaptation of PPO (PPO).
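To contrast the two value-factorization methods, here is a framework-free sketch (plain Python, not TorchRL) of how each combines per-agent Q-values into a joint value. VDN simply sums them. The second function is a simplified QMIX-style mixer: non-negative weights (via `abs`) and an increasing activation (ELU) make the joint value monotonic in every agent's Q-value. In QMIX itself, the mixing weights are generated from the global state by hypernetworks, which this sketch replaces with fixed numbers. The function names are illustrative only.

```python
import math
from typing import Sequence


def vdn_mix(agent_qs: Sequence[float]) -> float:
    """VDN: the joint action-value is the sum of per-agent action-values."""
    return sum(agent_qs)


def elu(x: float) -> float:
    """Exponential linear unit, a monotonically increasing activation."""
    return x if x > 0 else math.exp(x) - 1.0


def monotonic_mix(
    agent_qs: Sequence[float],
    w1: Sequence[Sequence[float]],  # [n_agents][hidden] first-layer weights
    b1: Sequence[float],            # [hidden] first-layer biases
    w2: Sequence[float],            # [hidden] second-layer weights
    b2: float,                      # scalar output bias
) -> float:
    """Simplified QMIX-style two-layer mixer.

    abs() keeps every weight non-negative and ELU is increasing, so the
    output never decreases when any single agent's Q-value increases.
    """
    hidden_size = len(b1)
    hidden = [
        elu(sum(abs(w1[i][j]) * q for i, q in enumerate(agent_qs)) + b1[j])
        for j in range(hidden_size)
    ]
    return sum(abs(w2[j]) * hidden[j] for j in range(hidden_size)) + b2


# Three agents, a hidden layer of size 2 (arbitrary illustrative values).
qs = [1.0, -0.5, 2.0]
w1 = [[0.5, -1.0], [1.0, 0.2], [-0.3, 0.8]]
b1 = [0.0, -1.0]
w2 = [1.0, -0.5]
b2 = 0.1

print("VDN Q_tot:", vdn_mix(qs))
print("QMIX-style Q_tot:", monotonic_mix(qs, w1, b1, w2, b2))
```

The monotonicity constraint is what lets each agent greedily maximize its own Q-value while still maximizing the mixed joint value. VDN is the special case where the mixer is a plain sum.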

Cologne Network Visualization

Defining Automated Vehicles Behavior Through Reward Formulations

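As described in the paper, the reward function enforces a selected behavior on the agent. For an agent k with behavioral parameters φₖ ∈ ℝ⁴, the reward is defined as:

\[
r_k = -\phi_k^\top T_k = -\left(\phi_{k,1}\, T_{\text{own}, k} + \phi_{k,2}\, T_{\text{group}, k} + \phi_{k,3}\, T_{\text{other}, k} + \phi_{k,4}\, T_{\text{all}, k}\right)
\]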

where Tₖ is a vector of travel time statistics provided to agent k, containing:

Own Travel Time (\(T_{\text{own}, k}\)): The amount of time the agent has spent in traffic.

Group Travel Time (\(T_{\text{group}, k}\)): The average travel time of agents in the same group (e.g., AVs for an AV agent).

Other Group Travel Time (\(T_{\text{other}, k}\)): The average travel time of agents in other groups (e.g., humans for an AV agent).

System-wide Travel Time (\(T_{\text{all}, k}\)): The average travel time of all agents in the traffic network.
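As a worked example of this weighted combination, the sketch below computes an agent's reward from φₖ and Tₖ. It assumes the reward is the negative dot product \(r_k = -\phi_k^\top T_k\), so a positive weight means the corresponding travel time should be minimized. The `TravelTimes` container and the `reward` function are illustrative names, not part of RouteRL or TorchRL.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class TravelTimes:
    """Travel-time statistics T_k observed by agent k (all in the same unit)."""
    own: float         # T_own,k: the agent's own travel time
    group: float       # T_group,k: mean travel time of the agent's group
    other: float       # T_other,k: mean travel time of the other group(s)
    all_agents: float  # T_all,k: mean travel time of all agents

    def as_vector(self) -> List[float]:
        return [self.own, self.group, self.other, self.all_agents]


def reward(phi: Sequence[float], times: TravelTimes) -> float:
    """Assumed reward r_k = -(phi . T_k): positive weights penalize travel time."""
    if len(phi) != 4:
        raise ValueError("phi must have exactly four components")
    return -sum(w * t for w, t in zip(phi, times.as_vector()))


# A selfish agent (phi = [1, 0, 0, 0]) only cares about its own travel time.
t = TravelTimes(own=300.0, group=280.0, other=350.0, all_agents=320.0)
print(reward([1, 0, 0, 0], t))  # -300.0
```

With this sign convention, a negative weight (as in the competitive and malicious behaviors) turns the corresponding travel time into something the agent is rewarded for increasing.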

Behavioral Strategies & Objective Weightings

| Behavior | ϕ₁ (own) | ϕ₂ (group) | ϕ₃ (other) | ϕ₄ (all) | Interpretation |
|---|---|---|---|---|---|
| Altruistic | 0 | 0 | 0 | 1 | Minimize delay for everyone |
| Collaborative | 0.5 | 0.5 | 0 | 0 | Minimize delay for oneself and one's own group |
| Competitive | 2 | 0 | -1 | 0 | Minimize self-delay & maximize delay for others |
| Malicious | 0 | 0 | -1 | 0 | Maximize delay for the other group |
| Selfish | 1 | 0 | 0 | 0 | Minimize delay for oneself |
| Social | 0.5 | 0 | 0 | 0.5 | Minimize delay for oneself & everyone |
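The table can be encoded directly as weight presets. Assuming the reward is \(r_k = -\phi_k^\top T_k\), the sketch below also shows why the malicious baseline gives every AV the same reward. Its only non-zero weight is on \(T_{\text{other}, k}\), and that quantity is identical for all AVs, so two AVs with different own travel times still receive identical rewards. `BEHAVIORS` and `reward` are illustrative names, and the travel times are made-up numbers.

```python
from typing import Dict, Sequence, Tuple

# Weights (phi_1, phi_2, phi_3, phi_4) applied to (T_own, T_group, T_other, T_all),
# copied from the behavior table.
BEHAVIORS: Dict[str, Tuple[float, float, float, float]] = {
    "altruistic": (0.0, 0.0, 0.0, 1.0),
    "collaborative": (0.5, 0.5, 0.0, 0.0),
    "competitive": (2.0, 0.0, -1.0, 0.0),
    "malicious": (0.0, 0.0, -1.0, 0.0),
    "selfish": (1.0, 0.0, 0.0, 0.0),
    "social": (0.5, 0.0, 0.0, 0.5),
}


def reward(phi: Sequence[float], t: Sequence[float]) -> float:
    """Assumed reward r_k = -(phi . T_k), with T_k = (own, group, other, all)."""
    return -sum(w * x for w, x in zip(phi, t))


# Two AVs with different own travel times. The group, other-group and
# system-wide averages are the same for every AV.
group_avg, human_avg, system_avg = 310.0, 350.0, 330.0
av_a = (290.0, group_avg, human_avg, system_avg)
av_b = (330.0, group_avg, human_avg, system_avg)

for name, phi in BEHAVIORS.items():
    print(f"{name:>13}: AV A {reward(phi, av_a):8.1f} | AV B {reward(phi, av_b):8.1f}")
```

The altruistic preset is shared as well, since \(T_{\text{all}, k}\) is common to all agents. Any preset with a non-zero ϕ₁ gives different rewards to AVs with different own travel times.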